
Hi, SEOs!

Big news from Google: they’ve added a robots.txt report to Google Search Console.

So, what’s the deal with this new report?

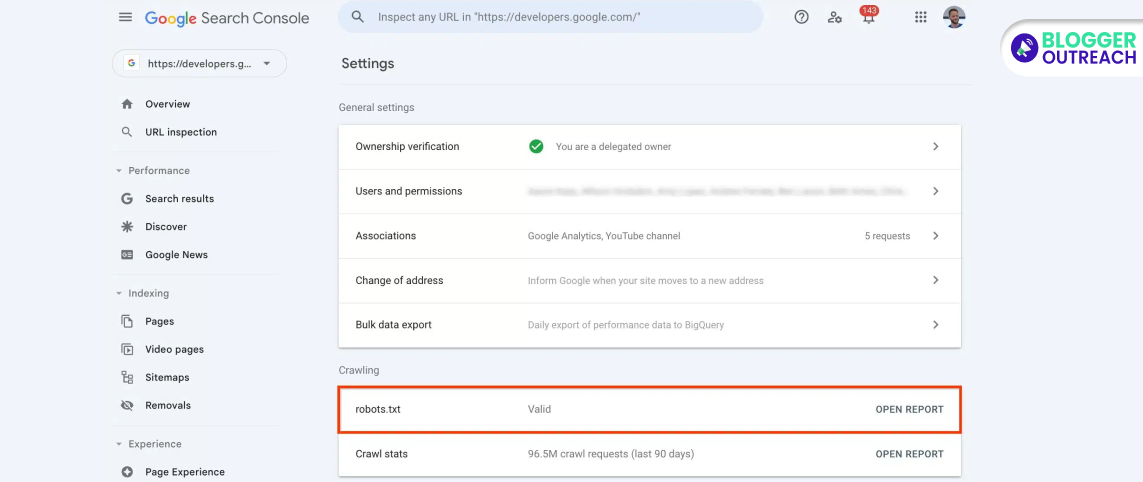

First, you can find it under Settings in Google Search Console.

The report lists the robots.txt files Google found for the top 20 hosts on your site.

It also shows the last time Google crawled each of those files.

And it flags any warnings or errors Google ran into along the way.

Here Is What Google Has Said:

“The robots.txt report shows which robots.txt files Google found for the top 20 hosts on your site, the last time they were crawled, and any warnings or errors encountered. The report also enables you to request a recrawl of a robots.txt file for emergency situations.”

Now, here’s the cool part – if you’re in a tight spot and need a quick fix, the report lets you request an emergency recrawl of your robots.txt file. Google’s got your back!

But that’s not all. Google has also added robots.txt-related info to the Page indexing report in Search Console.

It’s like a double scoop of ice cream – more insights, more solutions. Speaking of solutions, Google also waved goodbye to the old robots.txt tester.

Curious About What It Looks Like?

Picture this: a neat report showing all the nitty-gritty details, accessible with just a few clicks. Simple, right?

Now, Why Should You Care About All This Robot Talk?

If you’re facing issues with indexing and crawling, this report could be your superhero. It gives you a peek into whether your robots.txt file is playing nice or causing mischief.
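Want a quick local sanity check to go with the report? Here’s a minimal sketch using Python’s built-in `urllib.robotparser`. The robots.txt content, the `is_allowed` helper, and the example.com URLs are all made up for illustration. Keep in mind that Python’s parser is not Google’s parser, so the two can disagree on edge cases; treat this as a rough first look, not the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, made up for this example.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url.

    Uses Python's standard-library parser, which may not match
    Googlebot's behavior in every case.
    """
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Placeholder URLs; swap in pages from a site you manage.
    for url in (
        "https://example.com/blog/post.html",
        "https://example.com/private/page.html",
    ):
        status = "allowed" if is_allowed(SAMPLE_ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(f"{url}: {status}")
```

If a page you expect to be indexed shows up as blocked here, that’s a good cue to open the robots.txt report and see what Google actually fetched.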

Take a few minutes to stroll through this report for the sites you manage in Google Search Console. It’s like a quick health check for your website’s accessibility.

Hold on!

Would you like to read Google’s complete help document on the new robots.txt report? Here you go!

In summary, Google is simplifying things for you. Out with the old, in with the new – a smoother, more efficient way to handle your robots.txt. So, buckle up and explore the new report.